Test point analysis (TPA) makes it possible to estimate a system test or acceptance test in an objective manner.

Development testing is an implicit part of the development estimate and is therefore outside the scope of TPA. To apply TPA, the scope of the information system must be known. To this end, the results of a function point analysis (FPA) are used. FPA is a method that makes it possible to make a technology-independent measurement of the scope of the functionality provided by an automated system, and to use that measurement as a basis for productivity measurement, estimating the required resources, and project control. The productivity factor in function point analysis does include the development tests, but not the acceptance and system tests.

Test point analysis can also be used if the number of test hours to be invested is determined in advance. Comparing the objective test point analysis estimate with the predetermined number of test hours clearly shows any risks that are being incurred. A test point analysis can also be used to calculate the relative importance of the various functions, so that the available test time can be used as effectively as possible. Finally, test point analysis can be used to create a global test estimate at an early stage (see Test Point Analysis At An Early Stage).
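As an illustration of using relative importance to spend a fixed budget, the following minimal sketch divides a predetermined number of test hours across functions in proportion to their test points. The proportional rule, the function name `allocate_hours`, and all figures are assumptions made for this example; they are not prescribed by TPA.

```python
def allocate_hours(test_points_per_function, available_hours):
    """Split a fixed test budget across functions in proportion to test points.

    Proportional allocation is an assumption of this sketch, not a TPA rule.
    """
    total_points = sum(test_points_per_function.values())
    if total_points <= 0:
        raise ValueError("total test points must be positive")
    return {
        name: available_hours * points / total_points
        for name, points in test_points_per_function.items()
    }


# Hypothetical test point counts per function (illustrative values only).
points = {"order entry": 120, "invoicing": 60, "nightly batch": 20}
budget = allocate_hours(points, available_hours=400)

for name, hours in budget.items():
    print(f"{name}: {hours:.0f} hours")
```

With these made-up figures, the function with the most test points receives the largest share of the 400 available hours.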

Philosophy

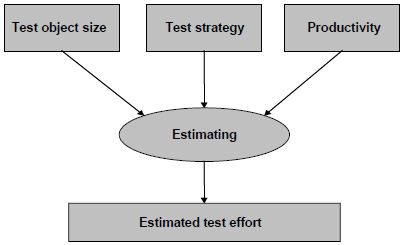

When establishing a test estimate in the framework of an acceptance or system test, three elements play a role

(see Figure 1: Estimating basic elements):

-

The size of the information system that is to be tested.

-

The test strategy (which object parts and quality characteristics must be tested and with what thoroughness, what

level of depth?).

-

The productivity.

The first two elements together determine the size of the test to be executed (expressed as test points). A test

estimate in hours results if the number of test points is multiplied by the productivity (the time required to execute

a specific test depth level). The three elements are elaborated in detail below.

Figure 1: Estimating basic elements

Size

Size in this context means the size of the information system. In test point analysis, the figure for this is based primarily on the number of function points. A number of additions and/or adjustments must be made in order to arrive at the figure for the test point analysis. This is because a number of factors can be distinguished during testing that play little or no part in determining the number of function points, but are vital to testing.

These factors are:

- Complexity - How many conditions are present in a function? More conditions almost automatically mean more test cases and therefore greater test effort.
- System impact - How many data collections are maintained by the function and how many other functions use them? These other functions must also be tested if this maintenance function is modified.
- Uniformity - Is the structure of a function such that existing test specifications can be reused with no more than small adjustments? In other words, are there multiple functions with the same structure in the information system?

Test strategy

During system development and maintenance, quality requirements are specified for the information system. During testing, the extent to which the specified quality requirements are complied with must be established. However, test resources and test time are never unlimited. This is why it is important to relate the test effort to the expected product risks. We use a product risk analysis to establish, among other things, test goals, relevant characteristics per test goal, object parts to be distinguished per characteristic, and the risk class per characteristic/object part. The result of the product risk analysis is then used to establish the test strategy. A characteristic/object part combination in a high risk class will often require heavy-duty, far-reaching tests and therefore a relatively great test effort when translated to the test strategy. The test strategy is input for the test point analysis, which translates it to the required test time.

In addition to the general quality requirements of the information system, there are differences in relation to the quality requirements between the various functions. The reliable operation of some functions is vital to the business process. The information system was developed for these functions. From a user's perspective, the function that is used intensively all day may be much more important than the processing function that runs at night. There are therefore two (subjective) factors per function that determine the depth: the user importance of the function and the intensity of use. The depth, as it were, indicates the level of certainty or insight into the quality that is required by the client. Obviously the factors user importance and intensity of use are based on the test strategy.

The test strategy tells us which combinations of characteristic/object part must be tested with what thoroughness. Often, the characteristic selected is a quality characteristic. The test point analysis also uses quality characteristics, which means that it is closely related to the test strategy and, in actual practice, is generally performed at the same time.

Linking TPA parameters to test strategy risk classes

TPA has many parameters that determine the required number of hours. The risk classes from the test strategy can be translated readily to these parameters. Generally, the TPA parameters have three values, which can then be linked to the three risk classes from the test strategy (risk classes A, B and C).

If no detailed information is available to divide the test object into the various risk classes, the following division can be used:

- 25% risk class A
- 50% risk class B
- 25% risk class C

This division must then be used as the starting point for a TPA.
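The default division can be sketched in a few lines. This is a minimal example that assumes the test object is measured in function points and that the split is applied to that total; the names `DEFAULT_RISK_SPLIT` and `split_by_risk_class` and the figure of 800 function points are hypothetical.

```python
# Default division from the text, used when no detailed risk information exists.
DEFAULT_RISK_SPLIT = {"A": 0.25, "B": 0.50, "C": 0.25}


def split_by_risk_class(total_function_points, split=DEFAULT_RISK_SPLIT):
    """Divide a size figure over risk classes A, B and C.

    Applying the split to function points is an assumption of this sketch.
    """
    if abs(sum(split.values()) - 1.0) > 1e-9:
        raise ValueError("risk class shares must add up to 100%")
    return {
        risk_class: total_function_points * share
        for risk_class, share in split.items()
    }


# Hypothetical system of 800 function points.
per_class = split_by_risk_class(800)
print(per_class)  # {'A': 200.0, 'B': 400.0, 'C': 200.0}
```

Once more detailed risk information becomes available, a different `split` can be passed in place of the default.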

Productivity

The concept of productivity is familiar to anyone who has made estimates based on function points. In function point analysis, productivity establishes the relation between effort hours and the measured number of function points. For test point analysis, productivity means the time required to realise one test point, determined by the size of the information system and the test strategy. Productivity consists of two components: the skill factor and the environment factor. The skill factor is based primarily on the knowledge and skills of the test team. As such, the figure is organisation-specific and even person-specific. The environment factor shows the extent to which the environment has an impact on the test activities to which the productivity relates. This involves aspects such as the availability of test tools, experience with the test environment in question, the quality of the test basis, and the availability of testware, if any.

Overall operation

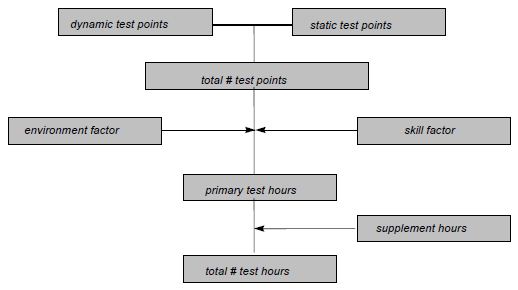

Schematically, this is how test point analysis works:

Figure 2: Schematic representation of test point analysis

The number of test points needed to test the dynamically measurable quality characteristics is established per function. It is based on the number of function points per function, the function-dependent factors (complexity, impact, uniformity, user importance and intensity of use), and the quality requirements and/or test strategy relating to the quality characteristics that must be measured dynamically. Dynamically measurable means that an opinion on a specific quality characteristic can be formed by executing programs.

Adding these test points over the functions results in the number of dynamic test points.

Based on the total number of function points of the information system and the quality requirements and/or test strategy relating to the static quality characteristics, the number of test points that is necessary to test the statically measurable quality characteristics is established (static testing: testing by verifying and investigating products without executing programs). This results in the number of static test points.

The total number of test points is obtained by adding the dynamic and static test points.

The primary test hours are then calculated by multiplying the total number of test points by the calculated environment factor and the applicable skill factor. The number of primary test hours represents the time necessary to execute the primary test activities. In other words, the time that is necessary to execute the test activities for the phases Preparation, Specification, Execution and Completion of the TMap life cycle.

The number of hours that is necessary to execute secondary test activities from the Control and Setting up and maintaining infrastructure phases (additional hours) is calculated as a percentage of the primary test hours.

Finally, the total number of test hours is obtained by adding the number of additional hours to the number of primary test hours. The total number of test hours is an estimate for all TMap test activities, with the exception of creating the test plan (Planning phase).
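The calculation steps described above can be sketched as follows. This is a minimal illustration under stated assumptions: the per-function dynamic test points and the static test points are taken as given inputs (how they are derived is not shown), and the function name `estimate_test_hours`, the skill factor, the environment factor, and the additional-hours percentage are hypothetical values chosen for the example.

```python
def estimate_test_hours(dynamic_points_per_function, static_points,
                        skill_factor, environment_factor, additional_pct):
    """Follow the steps in the text: test points -> primary hours -> total hours."""
    # Dynamic test points are summed over all functions.
    dynamic_points = sum(dynamic_points_per_function.values())
    # Total test points = dynamic + static test points.
    total_points = dynamic_points + static_points
    # Primary hours = total test points x skill factor x environment factor.
    primary_hours = total_points * skill_factor * environment_factor
    # Additional hours are a percentage of the primary hours.
    additional_hours = primary_hours * additional_pct / 100
    return {
        "total_test_points": total_points,
        "primary_hours": primary_hours,
        "additional_hours": additional_hours,
        "total_hours": primary_hours + additional_hours,
    }


# All input values below are made up for illustration.
estimate = estimate_test_hours(
    dynamic_points_per_function={"order entry": 300, "invoicing": 150,
                                 "nightly batch": 50},
    static_points=100,
    skill_factor=1.5,        # assumed hours per test point
    environment_factor=1.0,  # assumed neutral environment
    additional_pct=10,       # assumed share for secondary activities
)
print(estimate["total_hours"])  # 990.0
```

With these inputs, 600 test points yield 900 primary hours and 90 additional hours. As the text notes, the result excludes the time needed to create the test plan.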

Principles

The following principles apply in relation to test point analysis:

- Test point analysis is limited to the quality characteristics that are 'measurable'. Being measurable means that a test technique is available for the relevant quality characteristic. Moreover, sufficient practical experience with this test technique must be available for the relevant quality characteristic to make concrete statements about the required test effort.
- Not all possible quality characteristics are included in the current version of test point analysis. The reasons vary: there may be no concrete test technique available (yet), or there may be insufficient practical experience with a test technique and therefore insufficient reliable metrics available. Any subsequent version of test point analysis may include more quality characteristics.
- In principle, test point analysis is not linked to a person. In other words, different persons executing a test point analysis on the same information system should, in principle, create the same estimate. This is achieved by letting the client determine all factors that cannot be classified objectively and using a uniform classification system for all factors that can.
- Test point analysis can be performed if a function point count according to IFPUG [IFPUG, 1994] is available; gross function points are used as the starting point.
- Test point analysis does not consider subject matter knowledge as a factor that influences the required test effort. However, it is of course important that the test team has a certain level of subject matter knowledge. This knowledge is a precondition that must be complied with while creating the test plan.
- Test point analysis assumes one complete retest on average when determining the estimate. This average is a weighted average based on the size of the functions, expressed as test points.

From COSMIC full function points (CFFP) to function points (FP)

To estimate the project size, the COSMIC (COmmon Software Measurement International Consortium) Full Function Points (CFFP) approach is used more and more often in addition to the Function Point Analysis (FPA) approach [Abran, 2003]. FPA was created in a period in which only a mainframe environment existed, and it relies heavily on the relationship between functionality and the data model. CFFP, however, also takes account of other architectures, such as client-server and multi-tier, and of development methods such as object-oriented, component-based, and RAD.

The following rule of thumb can be used to convert CFFPs to function points (FPs):

- If CFFP < 250 : FP = CFFP
- If 250 ≤ CFFP ≤ 1000 : FP = CFFP / 1.2
- If CFFP > 1000 : FP = CFFP / 1.5
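The rule of thumb above translates directly into a small function. This is a minimal sketch; the function name `cffp_to_fp` and the sample inputs are chosen for this example only.

```python
def cffp_to_fp(cffp):
    """Convert COSMIC full function points to function points (rule of thumb)."""
    if cffp < 0:
        raise ValueError("CFFP must not be negative")
    if cffp < 250:
        return float(cffp)   # small systems: FP = CFFP
    if cffp <= 1000:
        return cffp / 1.2    # 250 <= CFFP <= 1000
    return cffp / 1.5        # CFFP > 1000


# Illustrative inputs, one per band of the rule.
for size in (200, 600, 1500):
    print(size, "CFFP ->", round(cffp_to_fp(size), 1), "FP")
```

Because the rule is stepwise, the result jumps at the band boundaries (for example, 249 CFFP gives 249 FP, while 250 CFFP gives about 208 FP), so sizes close to 250 or 1000 are best treated with some caution.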
